Radiology Report Generation Using CT and MRI Images

Abstract

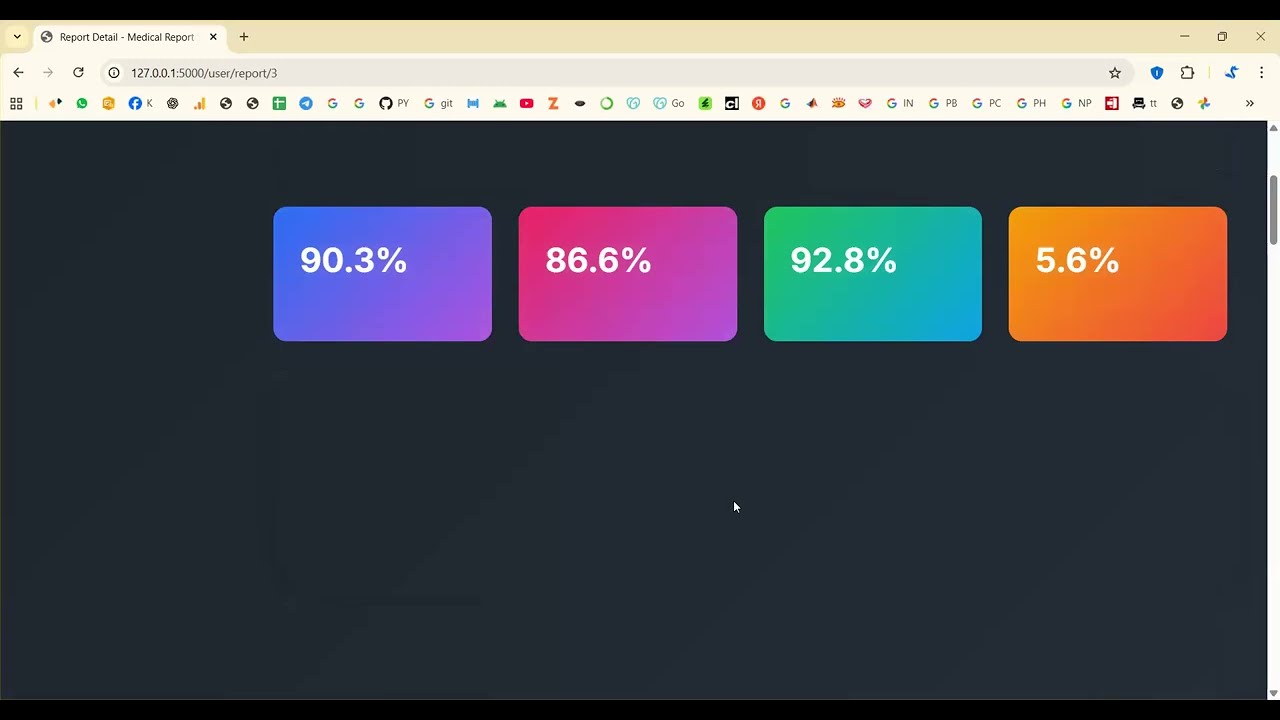

Radiology report generation from CT and MRI images aims to automate the conversion of medical imaging data into accurate, structured, and clinically meaningful diagnostic reports using artificial intelligence. Traditional radiology reporting is time-consuming and depends heavily on the expertise of the individual radiologist, which can lead to variability in interpretation and reporting quality. The proposed system uses deep learning models such as Convolutional Neural Networks (CNNs) for image feature extraction and Natural Language Processing (NLP) techniques for report generation, and it assists radiologists by producing preliminary diagnostic reports. The approach is intended to reduce reporting time and workload, improve diagnostic consistency, and support faster clinical decision-making without sacrificing accuracy or reliability.

Existing System

In the existing system, radiology reports are generated manually by radiologists after visually analyzing CT and MRI scans. This process requires significant time, expertise, and concentration, especially when dealing with large volumes of imaging data. The interpretation is highly dependent on individual experience, which can result in inter-observer variability and inconsistencies in report structure and terminology. Additionally, the growing demand for medical imaging has increased the workload on radiologists, leading to delays in diagnosis and increased chances of human error. Existing Computer-Aided Diagnosis (CAD) tools mainly assist in highlighting suspicious regions but do not provide complete, structured radiology reports.

Proposed System

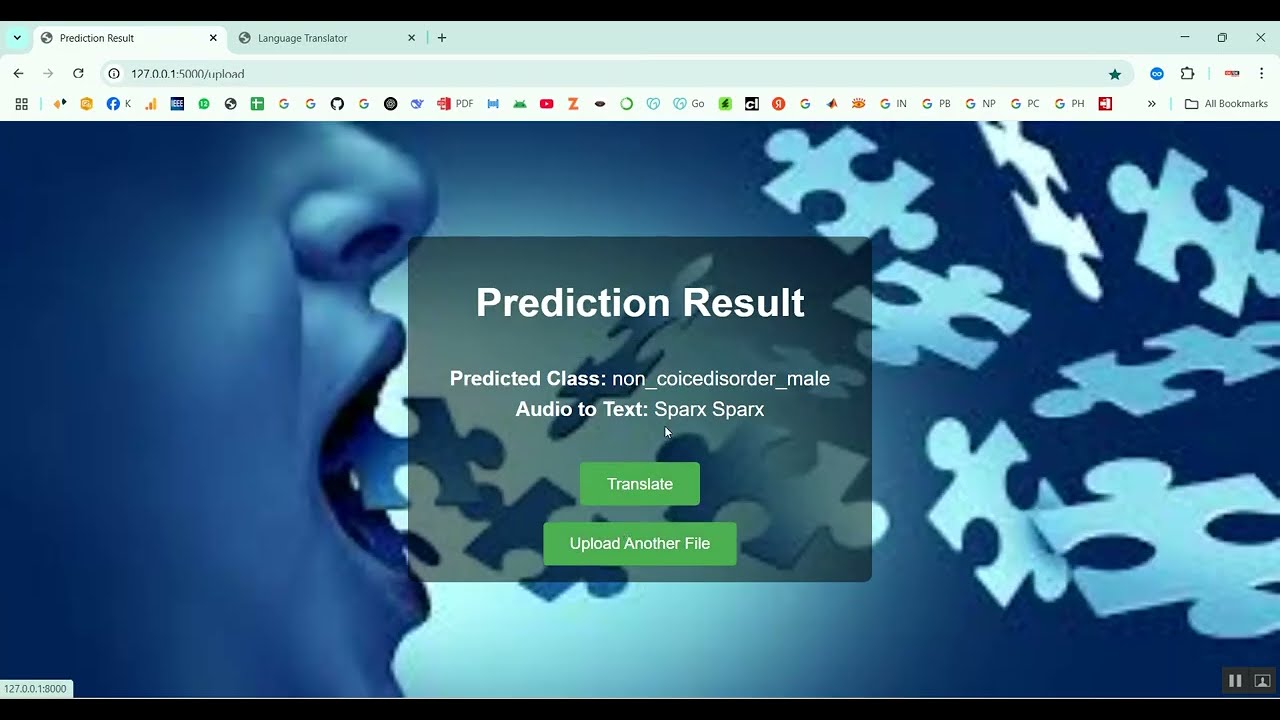

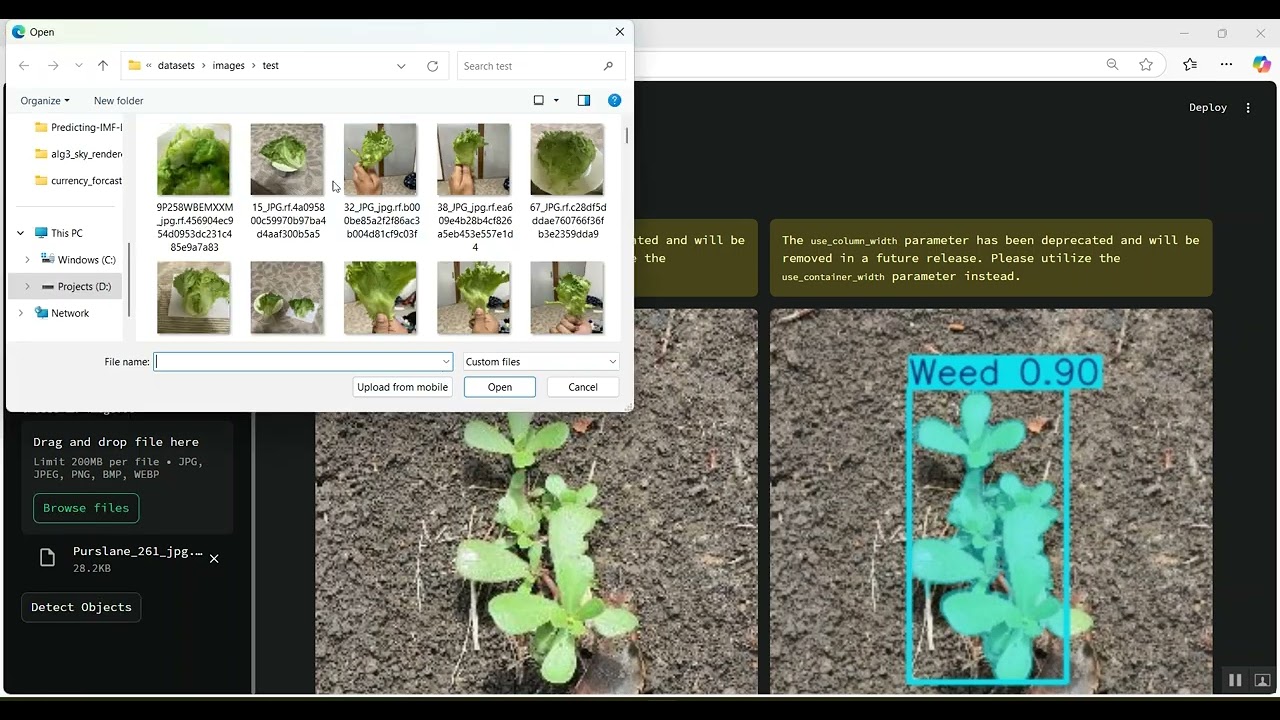

The proposed system introduces an AI-powered framework for automated radiology report generation from CT and MRI images. It combines deep learning-based image analysis with NLP-based report synthesis. An image encoder, either a CNN such as ResNet or DenseNet or a Vision Transformer, extracts anatomical and pathological features from the medical images. These features are then passed to a sequence-to-sequence or transformer-based language model, which generates a structured, standardized radiology report. The system is designed to identify abnormalities, localize affected regions, and describe findings in clinically relevant language. It also supports integration with hospital PACS and RIS, so it can fit into the existing reporting workflow. Acting as a decision-support tool, the proposed system is intended to improve reporting speed, consistency, and diagnostic accuracy while assisting radiologists rather than replacing them.
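To make the "structured and standardized" output concrete, here is a minimal sketch of the last stage only: turning model findings into a fixed-section preliminary report. It is an illustration under stated assumptions, not the project's implementation. The `Finding` class, the `render_report` function, the section names, and the `review_threshold` value are all hypothetical, and a simple template stands in for the transformer-based language model described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    """One hypothetical model output: what was detected, where, and how confidently."""
    label: str         # e.g. "hypodense lesion"
    region: str        # e.g. "right hepatic lobe"
    confidence: float  # model score in [0, 1]


def render_report(modality: str, findings: List[Finding],
                  review_threshold: float = 0.8) -> str:
    """Assemble a fixed-section preliminary report from model findings.

    review_threshold is an assumed cut-off: findings scoring below it are
    flagged so the radiologist knows to check them first.
    """
    lines = [f"MODALITY: {modality}", "FINDINGS:"]
    if not findings:
        lines.append("- No abnormality detected by the model.")
    # List the highest-confidence findings first.
    for f in sorted(findings, key=lambda item: item.confidence, reverse=True):
        flag = "" if f.confidence >= review_threshold else " [low confidence, needs review]"
        label = f.label[:1].upper() + f.label[1:]  # capitalize the first letter only
        lines.append(f"- {label} in the {f.region} (score {f.confidence:.2f}){flag}")
    # The report is always marked preliminary: the system assists, it does not sign off.
    lines.append("STATUS: Preliminary, pending radiologist sign-off.")
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [
        Finding("hypodense lesion", "right hepatic lobe", 0.91),
        Finding("small pleural effusion", "left hemithorax", 0.62),
    ]
    print(render_report("CT", demo))
```

Keeping the section layout fixed is what gives every report the same structure and terminology regardless of who reads the scan. Flagging low-scoring findings instead of dropping them keeps the radiologist in the loop, in line with the decision-support role described above.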
